EU AI Act

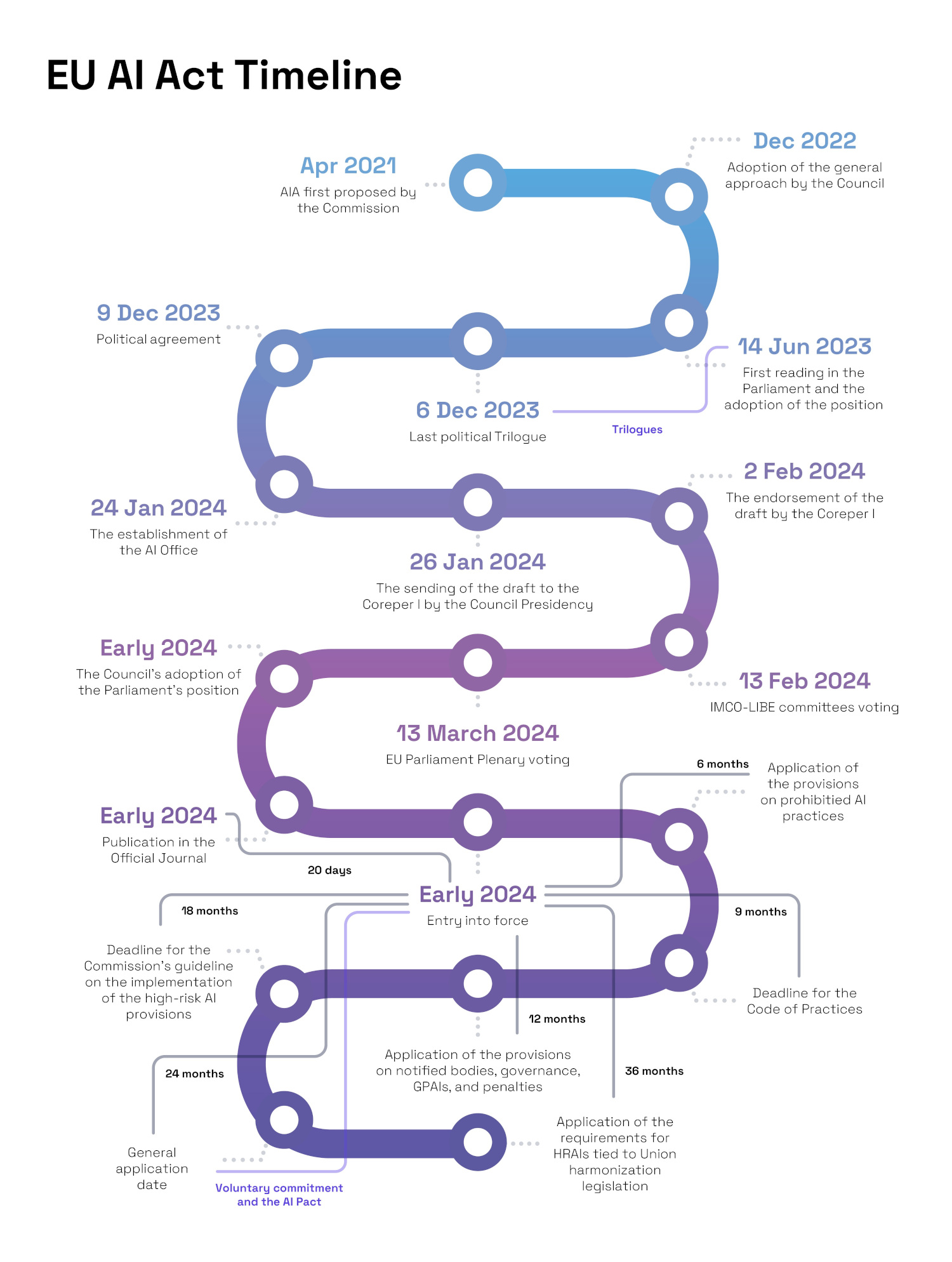

The EU AI Act, proposed by the European Commission in April 2021, aims to regulate the use of AI in the EU by protecting users from AI-related harm and prioritizing human rights.

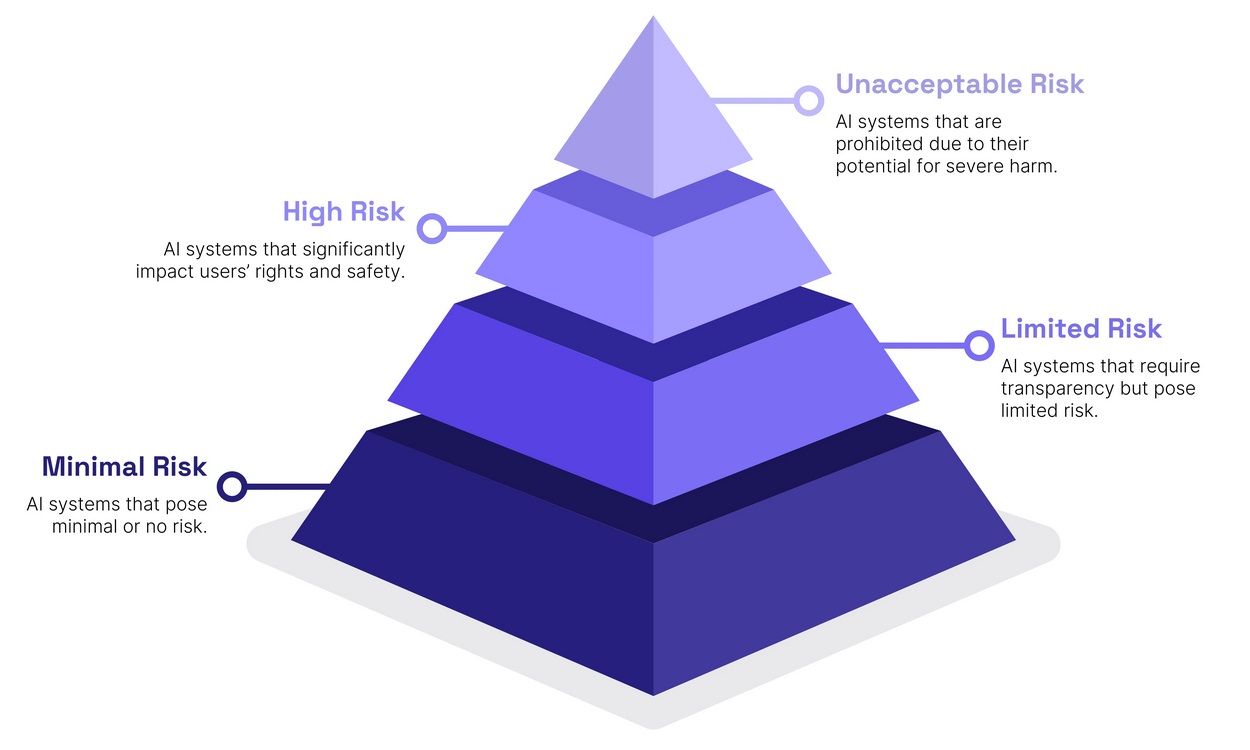

Using a risk-based approach, the EU AI Act imposes obligations that are proportional to the risk posed by an AI system or a general-purpose AI model.

After a lengthy consultation process in which several amendments were proposed, the European Parliament adopted its negotiating position in June 2023, opening roughly six months of trilogue negotiations between the Parliament, the Council, and the Commission.

At the end of this process, a provisional agreement was reached in December 2023, and Coreper I (the Committee of the Permanent Representatives) endorsed the agreed text in February 2024.

The European Parliament voted to approve the AI Act on 13 March 2024.

This landmark legislation is widely expected to become a global benchmark for AI regulation and will have important implications for organizations both within and outside the EU because of its extraterritorial scope.

Core Objectives and Principles

The core objectives of the EU AI Act are to:

- Ensure Safety and Transparency: Establish guidelines that ensure AI systems are safe and transparent.

- Protect Fundamental Rights: Safeguard the rights of EU citizens by regulating AI use.

- Foster Trust in AI: Build an ecosystem of trust to encourage the adoption of AI technologies.

These principles aim to create a balanced approach that promotes innovation while protecting users.

Key Provisions and Their Implications for Businesses

The EU AI Act includes several key provisions that businesses must adhere to:

- Risk Management: Implement comprehensive risk management systems.

- Data Governance: Maintain high standards for data quality and governance.

- Transparency Requirements: Ensure transparency in AI operations and communications.

- Human Oversight: Implement mechanisms for human oversight of AI systems.
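The summary above calls for human oversight mechanisms without naming a specific design. One common pattern is to route low-confidence model outputs to a person and let that person override the model. The sketch below assumes a hypothetical `gate` function, a `Decision` record, and an arbitrary 0.8 threshold. It illustrates the pattern and is not a mechanism the Act prescribes:

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold: model outputs below this confidence go to a human reviewer.
REVIEW_THRESHOLD = 0.8


@dataclass
class Decision:
    label: str
    confidence: float
    needs_review: bool
    reviewer_override: Optional[str] = None

    @property
    def final_label(self) -> str:
        # A human reviewer's decision, when present, takes precedence over the model's.
        if self.reviewer_override is not None:
            return self.reviewer_override
        return self.label


def gate(label: str, confidence: float, threshold: float = REVIEW_THRESHOLD) -> Decision:
    """Flag low-confidence model outputs for human review before they take effect."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return Decision(label, confidence, needs_review=confidence < threshold)


decision = gate("reject", 0.62)
print(decision.needs_review)   # True
decision.reviewer_override = "accept"
print(decision.final_label)    # accept
```

Keeping the override on the record, rather than discarding the model's label, preserves both values for later review.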

Implications for Businesses

- Compliance Costs: Businesses may incur costs for implementing necessary changes.

- Operational Changes: Adjustments to AI development and deployment processes.

- Legal Accountability: Increased legal obligations and potential penalties for non-compliance.

| Risk Level | Examples |
|---|---|
| Minimal Risk | Spam filters, AI-enabled video games |
| Limited Risk | Chatbots, deepfakes |
| High Risk | AI in healthcare, employment screening tools |
| Unacceptable Risk | Social scoring systems, real-time remote biometric identification in public spaces for law enforcement (narrow exceptions apply) |
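The tiers in the table form an ordered scale, so they can be modeled as an ordered enumeration. The sketch below maps the table's examples to tiers under hypothetical snake_case keys. It assumes, as a simplification, that a system serving several purposes is treated under the strictest tier among them. Unknown use cases raise an error instead of defaulting, because real classification needs a case-by-case assessment:

```python
from enum import IntEnum


class RiskTier(IntEnum):
    # Ordered so that a higher value means stricter treatment under the Act.
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# Examples taken from the table above; the keys are hypothetical labels.
EXAMPLE_TIERS = {
    "spam_filter": RiskTier.MINIMAL,
    "video_game_ai": RiskTier.MINIMAL,
    "chatbot": RiskTier.LIMITED,
    "deepfake_generator": RiskTier.LIMITED,
    "healthcare_ai": RiskTier.HIGH,
    "employment_screening": RiskTier.HIGH,
    "social_scoring": RiskTier.UNACCEPTABLE,
}


def strictest_tier(use_cases: list[str]) -> RiskTier:
    """Return the strictest tier among a system's use cases.

    Raises KeyError for a use case missing from the lookup.
    """
    if not use_cases:
        raise ValueError("at least one use case is required")
    return max(EXAMPLE_TIERS[u] for u in use_cases)


print(strictest_tier(["chatbot", "employment_screening"]).name)  # HIGH
```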

...

Specific Requirements for Each Risk Level

Minimal Risk:

- No specific requirements under the Act.

- Must comply with existing legislation.

Limited Risk:

- Transparency: Users must be informed they are interacting with an AI system.

- Examples: AI systems inferring characteristics or emotions, content generated using AI.

High Risk:

- Risk Management: Continuous risk identification and mitigation.

- Data Governance: Ensure high-quality, representative data.

- Technical Documentation: Maintain detailed documentation for compliance.

- Human Oversight: Implement human oversight mechanisms.

- Transparency: Clear instructions and information for deployers.

Unacceptable Risk:

- Prohibition: These systems are banned from use and sale in the EU.

- Examples: AI systems that use manipulative or subliminal techniques to distort people's behavior and cause significant harm, systems enabling social scoring.
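The per-tier requirements above can be captured as a lookup from tier to obligations, with the unacceptable tier handled as a hard stop instead of a list of duties. A minimal sketch follows. The obligation strings paraphrase the lists above and are not an exhaustive legal checklist. The names `obligations_for` and `ProhibitedSystemError` are hypothetical:

```python
from enum import IntEnum


class RiskTier(IntEnum):
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# Obligations paraphrased from the per-tier lists above.
OBLIGATIONS = {
    RiskTier.MINIMAL: [],  # no Act-specific duties; existing legislation still applies
    RiskTier.LIMITED: ["inform users they are interacting with an AI system"],
    RiskTier.HIGH: [
        "continuous risk identification and mitigation",
        "high-quality, representative data",
        "detailed technical documentation",
        "human oversight mechanisms",
        "clear instructions and information for use",
    ],
}


class ProhibitedSystemError(Exception):
    """Raised for unacceptable-risk systems, which are banned rather than regulated."""


def obligations_for(tier: RiskTier) -> list[str]:
    if tier is RiskTier.UNACCEPTABLE:
        raise ProhibitedSystemError("unacceptable-risk systems cannot be offered in the EU")
    return list(OBLIGATIONS[tier])  # copy so callers cannot mutate the shared table


print(len(obligations_for(RiskTier.HIGH)))  # 5
```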

Impact on Enterprises - Who Needs to Comply?

The EU AI Act affects a broad range of entities involved in the AI lifecycle.

These include:

- Providers: Developers or entities placing AI systems on the market.

- Deployers: Organizations using AI systems.

- Importers: Entities bringing AI systems into the EU market.

- Distributors: Parties making AI systems available within the EU.

The Act has an extraterritorial scope: it applies not only to EU-based entities but also to companies outside the EU that place AI systems on the EU market or whose AI systems produce output that is used in the EU. As a result, many non-EU companies will also need to comply.
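The extraterritorial reach can be sketched as a simple predicate. This is a deliberately simplified model. It assumes three hypothetical yes/no facts about an operator: whether it is established in the EU, whether it places AI systems on the EU market, and whether its systems' output is used in the EU. The real test in the Act also depends on the operator's role and on exemptions that are not modeled here:

```python
from dataclasses import dataclass


@dataclass
class Operator:
    # Hypothetical yes/no facts about a company; real scoping needs legal review.
    established_in_eu: bool
    places_systems_on_eu_market: bool
    output_used_in_eu: bool


def may_be_in_scope(op: Operator) -> bool:
    """Simplified territorial test: any of the three EU links brings the operator into scope."""
    return op.established_in_eu or op.places_systems_on_eu_market or op.output_used_in_eu


# A provider based outside the EU whose system's output is used by an EU customer.
non_eu_provider = Operator(
    established_in_eu=False,
    places_systems_on_eu_market=False,
    output_used_in_eu=True,
)
print(may_be_in_scope(non_eu_provider))  # True
```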

Key Obligations for Each Type of Entity

Providers:

- Ensure compliance with all technical and regulatory standards.

- Maintain up-to-date technical documentation.

- Implement risk management and data governance practices.

Deployers:

- Use AI systems according to instructions.

- Ensure human oversight and transparency.

- Monitor AI system operations and report issues.

Importers:

- Verify compliance of AI systems before importing.

- Ensure accurate documentation and labeling.

- Cooperate with regulatory bodies.

Distributors:

- Confirm AI systems meet EU standards.

- Provide necessary information to deployers.

- Support compliance and monitoring efforts.
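One organization can hold several of these roles at once, for example by importing a system and then deploying it internally. In that case it is useful to merge the duties for each role. A minimal sketch follows, with the duties paraphrased from the lists above and the function name `obligations_for_roles` purely hypothetical:

```python
# Duties per operator role, paraphrased from the lists above.
ROLE_OBLIGATIONS: dict[str, list[str]] = {
    "provider": [
        "comply with technical and regulatory standards",
        "keep technical documentation up to date",
        "run risk management and data governance",
    ],
    "deployer": [
        "use the system according to its instructions",
        "ensure human oversight and transparency",
        "monitor operation and report issues",
    ],
    "importer": [
        "verify compliance before importing",
        "ensure accurate documentation and labeling",
        "cooperate with regulators",
    ],
    "distributor": [
        "confirm the system meets EU requirements",
        "pass necessary information to deployers",
        "support compliance and monitoring",
    ],
}


def obligations_for_roles(roles: set[str]) -> list[str]:
    """Merge duties for an organization that holds several roles at once."""
    unknown = roles - set(ROLE_OBLIGATIONS)
    if unknown:
        raise ValueError(f"unknown role(s): {sorted(unknown)}")
    merged: list[str] = []
    for role in sorted(roles):  # sorted for a stable, reproducible order
        for duty in ROLE_OBLIGATIONS[role]:
            if duty not in merged:  # skip duplicates if roles ever share a duty
                merged.append(duty)
    return merged


# A company that imports a system and also uses it internally.
for duty in obligations_for_roles({"importer", "deployer"}):
    print("-", duty)
```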

Enhancing Transparency and Human Oversight

Transparency and human oversight are key to building trust and ensuring compliance.

Ensure clear communication about AI system usage to all stakeholders.

Implement training and competency requirements for human oversight to ensure personnel can effectively monitor AI systems.

Maintain transparency in AI operations by providing clear instructions and information about AI capabilities and limitations.

Clear Communication:

- Inform users they are interacting with an AI system.

- Provide detailed instructions and capabilities.

Training Requirements:

- Regular training sessions for staff.

- Certification programs for AI oversight.

Operational Transparency:

- Regular updates and reports on AI system performance.

- Open channels for feedback and concerns.
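For a chatbot, one way to act on the point about informing users that they are interacting with an AI system is to attach a notice to the first reply of each session. The sketch below assumes a hypothetical `DisclosingChat` wrapper around any reply function and uses placeholder notice wording. It illustrates the idea and does not reproduce wording prescribed by the Act:

```python
from typing import Callable

# Placeholder wording; the requirement is to inform users, not to use this exact text.
AI_DISCLOSURE = "You are chatting with an automated AI assistant."


class DisclosingChat:
    """Wrap a reply function so the first reply of a session carries an AI disclosure."""

    def __init__(self, reply_fn: Callable[[str], str]) -> None:
        self._reply_fn = reply_fn
        self._disclosed = False  # tracks whether this session has shown the notice

    def send(self, message: str) -> str:
        reply = self._reply_fn(message)
        if not self._disclosed:
            self._disclosed = True
            return f"{AI_DISCLOSURE}\n\n{reply}"
        return reply


chat = DisclosingChat(lambda msg: f"Echo: {msg}")
print(chat.send("Hi"))   # disclosure line, blank line, then "Echo: Hi"
print(chat.send("Bye"))  # Echo: Bye
```

Creating one wrapper per session keeps the disclosure state from leaking between users.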

FAQs

Who needs to prepare for the EU AI Act assessment?

The Act applies to providers, deployers, distributors, and importers of AI systems that are placed on the market or put into service within the European Union. The level of preparedness required under the Act differs for each operator. For providers and deployers, the Act may also apply extraterritorially, meaning that providers and deployers of AI systems may need to prepare for the EU AI Act even if they are based outside the European Union.

What are the key requirements for high-risk AI systems in the EU AI Act?

There are seven key design-related requirements for high-risk AI systems under the EU AI Act:

- Establishment of a risk management system

- Maintaining appropriate data governance and management practices

- Drawing up technical documentation

- Record-keeping

- Ensuring transparency and the provision of information to deployers

- Maintaining an appropriate level of human oversight

- Ensuring an appropriate level of accuracy, robustness, and cybersecurity
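Teams preparing for an assessment often track these seven requirements as a checklist. A minimal sketch follows. It assumes hypothetical snake_case keys for the requirements and a simple yes/no record of whether evidence exists for each one:

```python
# The seven high-risk requirements listed above, as hypothetical short keys.
HIGH_RISK_REQUIREMENTS = (
    "risk_management_system",
    "data_governance",
    "technical_documentation",
    "record_keeping",
    "transparency_to_deployers",
    "human_oversight",
    "accuracy_robustness_cybersecurity",
)


def missing_requirements(evidence: dict[str, bool]) -> list[str]:
    """Return requirements with no recorded evidence, in the order listed above."""
    return [req for req in HIGH_RISK_REQUIREMENTS if not evidence.get(req, False)]


status = {
    "risk_management_system": True,
    "data_governance": True,
    "technical_documentation": False,
    "record_keeping": True,
}
print(missing_requirements(status))
# ['technical_documentation', 'transparency_to_deployers', 'human_oversight', 'accuracy_robustness_cybersecurity']
```

Treating an absent key as "no evidence" means a requirement nobody has looked at yet shows up as a gap.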

What are the potential consequences of non-compliance with the EU AI Act?

Non-compliance with the provisions of the EU AI Act is sanctioned with hefty administrative fines.
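In the final text (Article 99 of Regulation (EU) 2024/1689), each fine cap is set as a fixed amount or a percentage of total worldwide annual turnover for the preceding financial year. The caps are up to €35 million or 7% for prohibited practices, €15 million or 3% for most other obligations, and €7.5 million or 1% for supplying incorrect, incomplete, or misleading information to authorities. For most undertakings the higher figure applies. For SMEs, including start-ups, the lower one applies. The sketch below computes only that upper bound, using hypothetical violation labels. It ignores the other factors authorities weigh when setting an actual fine:

```python
# Caps per violation type: (fixed amount in EUR, percent of worldwide annual turnover).
FINE_CAPS: dict[str, tuple[int, int]] = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def max_fine(violation: str, worldwide_turnover_eur: int, is_sme: bool = False) -> float:
    """Upper bound on the administrative fine; actual amounts are set case by case."""
    fixed, percent = FINE_CAPS[violation]
    turnover_based = worldwide_turnover_eur * percent / 100
    # Most undertakings face whichever cap is higher; SMEs and start-ups, whichever is lower.
    return min(fixed, turnover_based) if is_sme else max(fixed, turnover_based)


print(max_fine("prohibited_practice", 1_000_000_000))            # 70000000.0
print(max_fine("prohibited_practice", 10_000_000, is_sme=True))  # 700000.0
```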

...